CEPH: A distributed storage solution

Léo Le Taro - M2 RTS - Université Claude Bernard Lyon 1

Introduction

In today's world, one can find numerous cloud storage providers such as Apple iCloud, Dropbox or Google Drive. Such Software as a Service (SaaS) solutions are rising in popularity because they allow users to access their data remotely, wherever they are, and provide some protection against hardware failure.

However, such approaches might not be suitable for medium-sized and large institutions that have specific constraints in terms of security and responsibility. For example, the University of Lyon 1 requires that all data remain hosted within its own information system.

CEPH is a distributed, multi-layer storage architecture. It is free software, licensed under the terms of the GNU LGPL, and aims to offer different storage paradigms, namely block, object and file system storage, in a transparent manner.

The underlying mechanisms that actually store the data are distributed among multiple hosts within a cluster. CEPH aims to balance load efficiently in order to prevent hot spots, i.e. nodes that suffer heavier loads than others because of the nature of the data they store. In addition, CEPH advertises reliability: nodes may fail without compromising data integrity or accessibility.

Architecture

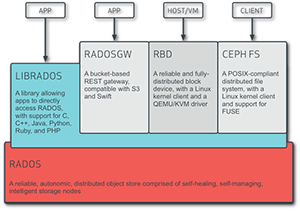

Overview of CEPH Layered Architecture

RADOS

CEPH RADOS is the layer that manages data storage and replication among the cluster nodes. It consists of two daemons: the CEPH OSD Daemon, which runs on each storage node and handles storage operations, and the CEPH Monitor, which keeps track of the cluster state, i.e. the map of active nodes and their roles. RADOS also scales out easily: nodes can be hot-plugged into a running cluster without further manual intervention.

RADOS can divide the logical storage area into different pools and supports pool-based access control. Such pools are not contiguous storage spaces but rather a logical partitioning of data. Objects belonging to the same pool may thus be evenly distributed among different nodes.
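The way objects of a single pool can end up spread and replicated over several nodes can be illustrated with a small placement sketch. CEPH's real placement algorithm is CRUSH; the code below does not implement it and uses rendezvous (highest-random-weight) hashing as a simplified stand-in. The node names, pool name and replica count are hypothetical.

```python
import hashlib
from collections import Counter


def score(node, pool, obj):
    """Deterministic pseudo-random score for one (node, object) pair."""
    digest = hashlib.sha256(f"{pool}/{obj}@{node}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place(pool, obj, nodes, replicas=2):
    """Pick the `replicas` highest-scoring nodes for an object.

    Simplified stand-in for CRUSH: every client that knows the node
    list computes the same placement without a central lookup table.
    """
    ranked = sorted(nodes, key=lambda n: score(n, pool, obj), reverse=True)
    return ranked[:replicas]


def load_per_node(pool, object_names, nodes, replicas=2):
    """Count how many object replicas land on each node."""
    counts = Counter({n: 0 for n in nodes})
    for name in object_names:
        counts.update(place(pool, name, nodes, replicas))
    return counts


if __name__ == "__main__":
    nodes = ["osd-a", "osd-b", "osd-c", "osd-d"]  # hypothetical node names
    names = [f"object-{i}" for i in range(1000)]
    print(load_per_node("demo-pool", names, nodes))
```

Because each placement depends only on the object name and the list of nodes, removing a node that holds no replica of an object leaves that object's placement unchanged, which is the property that keeps data movement small when the cluster changes.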

RADOS automatically monitors usage statistics and balances load between the nodes of the cluster to prevent hot spots from forming.

LibRADOS

LibRADOS is the middleware: a set of APIs that allow user applications written in C, C++, Python, PHP, Ruby and Java to directly access RADOS storage. The higher-level daemons that map RADOS storage to object, block and file system storage are built upon LibRADOS. LibRADOS is modular and actually consists of one API per target programming language.

Although typical CEPH users will rely on the higher-level interfaces, one may choose to access RADOS storage directly through LibRADOS.
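As an illustration of direct access, here is a minimal sketch based on the Python binding of LibRADOS (the `rados` module). It assumes a reachable cluster, a configuration file at `/etc/ceph/ceph.conf`, suitable credentials and an existing pool; the pool and object names are hypothetical. The binding is imported lazily so that the helper can also be exercised against any object exposing the same methods.

```python
def open_cluster(conffile="/etc/ceph/ceph.conf"):
    """Connect to a cluster through the librados Python binding.

    The config path is an assumption; adjust it to the local setup.
    """
    import rados  # Python binding distributed with Ceph

    cluster = rados.Rados(conffile=conffile)
    cluster.connect()
    return cluster


def round_trip(cluster, pool_name, object_name, payload):
    """Write `payload` to an object, then read it back from RADOS."""
    ioctx = cluster.open_ioctx(pool_name)  # I/O context bound to one pool
    try:
        ioctx.write_full(object_name, payload)  # replace the whole object
        # read() returns at most 8192 bytes unless a length is passed
        return ioctx.read(object_name)
    finally:
        ioctx.close()


# Usage against a real cluster (hypothetical pool and object names):
#   cluster = open_cluster()
#   print(round_trip(cluster, "demo-pool", "greeting", b"hello"))
#   cluster.shutdown()
```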

High-level utilities

CEPH offers high-level APIs for working with RADOS storage. Since these APIs serve different purposes, they are namespaced and work on different RADOS pools: one cannot write data with one API and read it directly from another.

Object storage: RADOSGW

RADOSGW is a RESTful gateway, implemented as an HTTP web service, that exposes RADOS data as object storage.

RADOSGW provides interfaces compatible with the Amazon S3 and OpenStack Swift APIs.
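To give an idea of what S3-style access looks like at the HTTP level, the sketch below builds (but does not send) a path-style `PUT /bucket/key` request with the Python standard library. The endpoint, bucket and key are hypothetical, and the request is deliberately unsigned: a real gateway would normally require S3 authentication headers, which are left out here.

```python
from urllib.parse import quote
from urllib.request import Request


def build_put_request(endpoint, bucket, key, payload):
    """Build a path-style object upload request: PUT <endpoint>/<bucket>/<key>.

    Authentication (S3 request signing) is intentionally omitted,
    so this only illustrates the shape of the REST call.
    """
    url = f"{endpoint.rstrip('/')}/{quote(bucket)}/{quote(key)}"
    return Request(url, data=payload, method="PUT")


if __name__ == "__main__":
    # Hypothetical gateway address, bucket and object key.
    req = build_put_request(
        "http://rgw.example.org:7480", "backups", "docs/report.txt", b"hello"
    )
    print(req.get_method(), req.full_url)
```

In practice one would rather point an existing S3 or Swift client library at the gateway than craft requests by hand.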

Block device: RBD

CEPH offers an interface that maps RADOS storage to a virtual, resizable block device that can be accessed from a Linux client. It is also compatible with the Kernel-based Virtual Machine (KVM) hypervisor. Data stored in such a block device is evenly distributed and replicated among storage nodes by RADOS.
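One way to picture how a block device is spread over RADOS is to cut its address space into fixed-size objects. The sketch below assumes the simplest layout, in which the image is split into consecutive 4 MiB objects with no additional striping; the function names are hypothetical and the object size is a parameter.

```python
DEFAULT_OBJECT_SIZE = 4 * 1024 * 1024  # 4 MiB per object (assumed layout)


def locate(offset, object_size=DEFAULT_OBJECT_SIZE):
    """Map a byte offset on the block device to (object index, offset in object)."""
    if offset < 0:
        raise ValueError("offset must be non-negative")
    return divmod(offset, object_size)


def objects_touched(offset, length, object_size=DEFAULT_OBJECT_SIZE):
    """Split one I/O request into per-object extents.

    Returns a list of (object_index, start_in_object, byte_count) tuples.
    """
    extents = []
    end = offset + length
    while offset < end:
        index, start = locate(offset, object_size)
        count = min(object_size - start, end - offset)
        extents.append((index, start, count))
        offset += count
    return extents


if __name__ == "__main__":
    # A 4 KiB write that straddles the boundary between objects 0 and 1.
    print(objects_touched(DEFAULT_OBJECT_SIZE - 1024, 4096))
```

Each resulting object is then an ordinary RADOS object, so it is placed and replicated like any other data in the pool.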

Most of today's cloud platforms, such as CloudStack, OpenStack and OpenNebula, support RBD, so administrators can build virtual machines on top of RBD storage.

File system storage: CephFS

CephFS offers POSIX-compatible file system storage that can be mounted through a client that is now included in recent Linux distributions. Directories, file names and metadata are mapped onto CEPH object storage. There is also a client daemon that integrates with FUSE to allow mounting CephFS in userspace.

To deploy a CEPH file system, one must run a cluster featuring at least one node with the special role of Metadata Server.

Benchmark Results

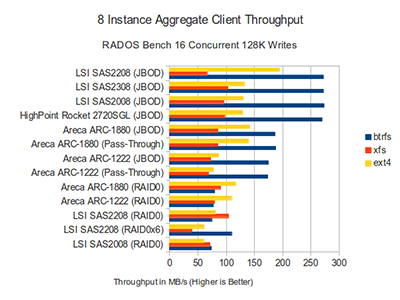

Many users in the CEPH community have tuned their CephFS configuration and run benchmarks on RAID0 arrays of hard drives and SSDs to assess the performance of such a distributed storage solution compared to the traditional ext4 and XFS file systems.

They found that in most cases CEPH performed well, excelling at workloads with many concurrent, medium-sized I/O operations.

However, they noted that CPU utilization was much higher on clients using CephFS, which is not surprising since CephFS clients have to handle network transactions and more complicated checks.

128KB RADOS Bench Results

Limitations of CEPH

- There is no Windows-compatible user client.

- There is no easy way to change the RADOS pool to which a piece of data belongs.

- CephFS does not support dynamic partitioning.

Conclusion

CEPH is a well-designed, comprehensive solution that allows information system administrators to build a solid, distributed architecture offering high-performance, highly reliable, authenticated storage accessible through multiple APIs and access points.